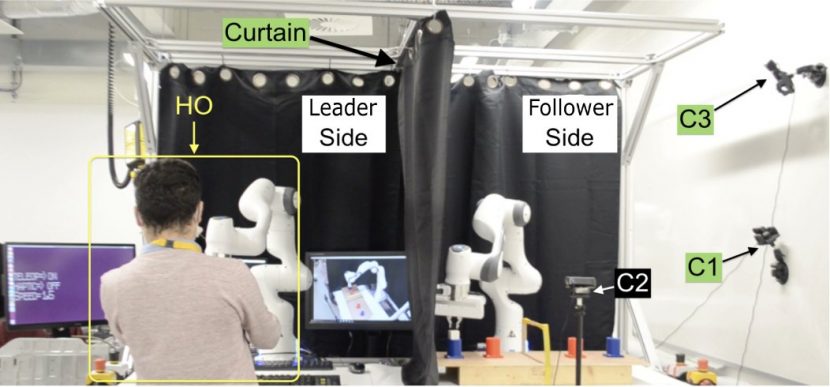

The Impact of Motion Scaling and Haptic Guidance on Operators’ Workload and Performance in Teleoperation

Congratulations to the L-CAS team and the intLab team on the publication of their paper “The Impact of Motion Scaling and Haptic Guidance on Operators’ Workload and Performance in Teleoperation” at ACM CHI’22! The L-CAS team, in collaboration with intLab at the University of Lincoln, is pleased to announce the publication of our paper on…